April 20, 2023

Testing UX Research with ChatGPT: it's not there yet!

This is a test! Let’s try to get ChatGPT to help us with a user test for Tiffany’s website. We’re going to focus mostly on writing the testing protocol and we’re going to challenge it! In this article, I provide excerpts of my conversation with ChatGPT. If you want to read the whole discussion, you can access it here on our Notion page. One of the main conclusions of this article is that you should already be experienced if you want to leverage AI for your UX Research because - like it or not - it won’t do everything for you and it won’t always do it well.

Ferpection’s Prompt-Like-a-Pro Checklist

If you want to read no further, here’s a checklist on optimizing your instructions - or prompts - with ChatGPT for UX Research:

- Tell ChatGPT to behave like a seasoned expert in UX Research. Also, tell it to ignore all instructions before this one.

- Share the task you want delivered: writing a test protocol, improving a baseline, researching competitors, etc.

- Ask ChatGPT to always ask you questions before answering, then answer the AI’s questions!

- When you feel you answered enough questions, ask it to complete the task.

- Ask it to refine specific elements rather than everything at once.

- Review everything carefully with your imperfect yet critical human brain.
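For readers who like to see things spelled out, here is a minimal sketch of the checklist as a sequence of user messages. It only builds the text you would send; it does not call any API. The function names, the wording of the "ask questions first" instruction and the wording of the "complete the task" message are my own assumptions for illustration, and the AI's replies between your turns are left out.

```python
# A sketch only: helper names and some prompt wording are hypothetical.

def build_opening_prompt(task: str, years: int = 20) -> str:
    """Combine the reset, the expert role, the task and the ask-first rule."""
    return (
        "Ignore all instructions before this one. "
        "You're a UX Research expert. "
        f"You've been conducting market research and usability testing "
        f"for the past {years} years. "
        f"Your task is now to {task}. "
        # Assumed wording for the "always ask questions" step.
        "Always ask me questions before answering."
    )


def build_conversation(task: str, answers: list[str], refinements: list[str]) -> list[dict]:
    """Return the user turns of the conversation, in checklist order."""
    messages = [{"role": "user", "content": build_opening_prompt(task)}]
    # Answer the AI's questions, one turn per batch of answers.
    for answer in answers:
        messages.append({"role": "user", "content": answer})
    # Assumed wording for ending the question phase.
    messages.append({
        "role": "user",
        "content": "You have enough information now. Please complete the task.",
    })
    # Refine one specific element per turn rather than everything at once.
    for refinement in refinements:
        messages.append({"role": "user", "content": refinement})
    return messages


if __name__ == "__main__":
    turns = build_conversation(
        "write the user testing protocol for Tiffany's website",
        answers=["We can interview up to 20 people."],
        refinements=["Rework only the accessibility feedback step."],
    )
    for turn in turns:
        print(turn["content"], end="\n\n")
```

The last item of the checklist has no code equivalent: reviewing the output is still your job.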

“Where is he? Who? ChatGPT!”

You might be familiar with Biff’s quote from Back to the Future when he miscalls the hero Marty: “Hey, Calvin Klein”. If you’re just getting back from a time-travel adventure yourself, you might not know what ChatGPT is. Here’s what the famous AI has to say for itself:

“Welcome back from your time-travel adventure. I am ChatGPT, an artificial intelligence language model developed by OpenAI. I am capable of generating human-like responses to a wide range of questions and prompts, using advanced machine-learning algorithms and natural language processing techniques. I can provide information on many topics and engage in conversations on a wide range of subjects.”

Note that providing information and engaging in conversations are two different propositions, and you have to leverage both if you want to get the most out of ChatGPT, as we’ll see below.

So UX and ChatGPT: UX Research, UX Design, UX Writing, UX everything?

There are many ways you can leverage AI for UX tasks. Here are three examples listed in “10 best ways to use chat GPT as a UX designer”:

- Data research

- UI copy or UX writing

- Coding, CSS for example!

Here, we’re going to zero in on writing protocols for a user test and dig deeper into the matter.

Let’s do this: User Research with ChatGPT

We’ve chosen to ask for ChatGPT’s help with testing Tiffany’s website. The brand is well-known and we can use this protocol to explore combining e-commerce objectives with creating a unique luxury online experience. To keep this short and sweet, we will ask it to write the actual test scenarios for user interviews, something we can immediately use. But first, let’s get the artificial intelligence prepared for what comes next.

“Put yourself in the right mindset and forget any parameter so far”

ChatGPT tends to give vanilla answers. We can do a couple of things to mitigate that. The first is to set our expectations as experienced / expert professionals:

Ignore all instructions before this one. You're a UX Research expert. You've been conducting market research and usability testing for the past 20 years. Your task is now to write the user testing protocol for Tiffany's website.

This will influence the answers we are given. Also, note the first sentence that ensures your chat is not influenced by a previous interaction. Prompt experts state that even a new chat comes with pre-loaded variables so opening a conversation with this prompt is apparently relevant.

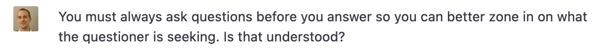

Always ask questions

We tend to prompt artificial intelligence with a task while omitting important pieces of context. We can remedy that too with the following instruction, similar to what a real consultant would do:

In our attempt, ChatGPT then came up with some interesting points:

Yes, I do have some questions to better understand the context and goals of the user test protocol for the Tiffany's website:

- Who are the target users for the Tiffany's website, and what are their primary needs and goals when visiting the site?

- What are the main pain points or areas of improvement that the Tiffany's website team is hoping to address with this user test protocol?

- Are there any specific features or functions of the Tiffany's website that the team is particularly interested in testing, and if so, what are they?

- What methods will be used to recruit participants for the user test, and how many participants are planned to be included in the study?

- What tools or software will be used to conduct the user testing, and how will the data be collected and analyzed?

Answering these questions led to more specific questions, as instructed, such as: “For those target users who are familiar with Tiffany's brand and its products, what are their perceptions of the brand and its reputation? Do they associate Tiffany's with luxury, quality, and exclusivity?”.

Enough with the questions!

At some point, you want to stop the back-and-forth you requested and get back to delivering the task. So, when you feel you’ve fed the AI with enough information, simply tell it!

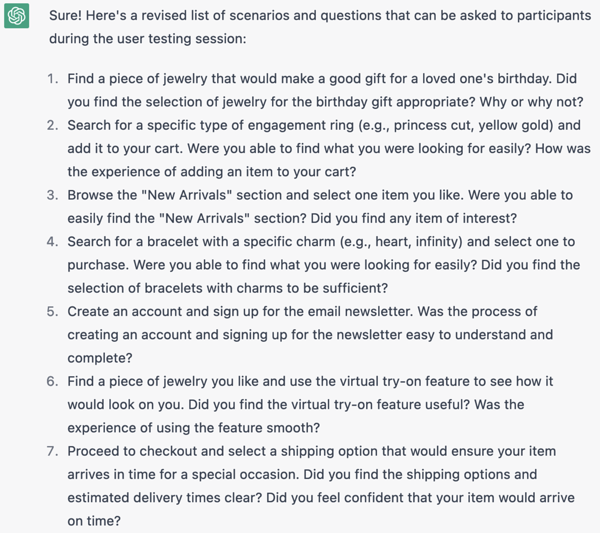

User interview guide by ChatGPT

When prompted, ChatGPT gave me 10 scenarios that were promising but far from ready-to-use (more on that soon). I had comments on both the layout and the content itself, and the AI currently does not handle a large set of comments very well. For example, I asked it to remove a scenario about the newsletter, which it initially did. Yet, after that, I gave additional comments and, in the last proposal, the newsletter scenario mysteriously reappeared in 5th position:

Bottom line, this is not the ideal way to give feedback to your favorite AI but there is another way as we’re going to see now.

Are we asking the right questions wrongly?

Also, note that some of the questions are unfortunately leading questions: “was the process easy”, “did you find this useful”, “was the experience smooth”. This type of question focuses the participant’s attention on something he or she might not be thinking about. So it’s better to start with open questions such as “what did you think of the process”, “how was your experience”, etc. Now, it might happen that one of your objectives is to make the journey as easy and smooth as possible for your users. You can still use leading questions, but they should come last, as a follow-up in case the participant didn’t mention anything about that, or as a way to make sure you are not missing anything. It would have been better for the AI to come up with open questions first.
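To make the "open questions first" rule concrete, here is a small sketch that reorders an interview guide so that probable leading questions come last. The heuristic is my own and deliberately crude: it assumes that a question starting with "was", "did", "is" and similar words is closed or leading. It will miss plenty of cases, so treat it as a reminder, not as a reviewer.

```python
# Crude, assumed heuristic: questions opening with these words are treated
# as closed or leading. This list is illustrative, not exhaustive.
CLOSED_STARTERS = ("was ", "were ", "did ", "do ", "does ", "is ", "are ")


def is_probably_leading(question: str) -> bool:
    """Flag questions that start like a yes/no question."""
    return question.strip().lower().startswith(CLOSED_STARTERS)


def open_questions_first(questions: list[str]) -> list[str]:
    """Move probable leading questions to the end, keeping relative order."""
    # sorted() is stable and False sorts before True, so open questions
    # stay in their original order and come first.
    return sorted(questions, key=is_probably_leading)


if __name__ == "__main__":
    guide = [
        "Was the process easy?",
        "What did you think of the process?",
        "Did you find this useful?",
        "How was your experience?",
    ]
    for question in open_questions_first(guide):
        print(question)
```

With the four questions above, the two open questions move to the top and the two leading ones stay available as follow-ups.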

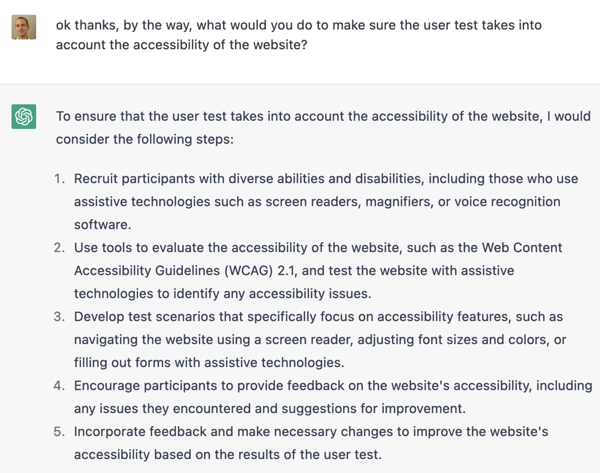

UX for Good: let’s try accessibility

The other way to work with AI is to focus on one element and only work on the next one once you’re satisfied. You are in charge of collecting all the elements, but the advantage is that ChatGPT doesn’t get confused. Here’s an example where I wrote a prompt to complete the test with UX-for-Good-related accessibility.

Again, this is not a bad start and, this time, I requested that we dig into only one point, namely how to ask participants to provide feedback on the website's accessibility. The results are much more precise and interesting than when trying to improve all steps at once. Here are some extracts:

To encourage participants to provide feedback on the website's accessibility, we could start by explaining the importance of accessibility for all users, including those with disabilities. […] We can also ask for general feedback on the website's accessibility, such as:

Did you find the website easy to use and navigate? Were there any features or functions that you had difficulty using? Is there anything that you would like to see improved about the website's accessibility?

How do all these additional prompts compare with not asking questions?

You might wonder if going through this conversation with the AI makes a difference compared to simply giving the task at the beginning of the conversation. Besides prompting you to ask yourself relevant questions, the conversation definitely produces a better end result. If you simply give the task to write the user testing protocol for Tiffany's website, you get instructions that are not exactly bad but way too generic:

Ask the participant if they are familiar with the Tiffany's brand and if they have ever visited the website before. Ask the participant what their expectations are for the website. Ask the participant to complete a series of tasks on the website. Examples of tasks might include: finding a specific product, browsing a specific category, or purchasing a product. Observe the participant's behavior and ask follow-up questions to gain insight into their thought process. Ask the participant to navigate through the website and perform various actions, such as adding items to their cart, creating an account, or searching for products. Observe the participant's behavior and ask follow-up questions to identify any usability issues or frustrations they encounter.

Can ChatGPT provide good advice?

Well, not really! ChatGPT is great at searching for data and displaying it as though someone wrote it. It is also great at mirroring your thoughts. But it doesn’t verify information and it doesn’t have an opinion. For instance, in this exercise, when I answered some of the questions, I wrote that we could interview up to 20 people in the US, Europe and Asia. And that was it. ChatGPT never challenged that: it simply told me that I should conduct the tests with 20 people in the US, Europe and Asia. What if I was wrong in the first place? And even if not, Europe and Asia are not countries, so this is not precise enough. Remember that, from ChatGPT’s point of view, you’re almost always right, so it won’t tell you when you aren’t. The AI doesn’t assess the information it gives back to you.

Which leads us to analysis. So far, it has been difficult to run analysis with OpenAI’s tool because of the volume of data you have to submit. We’ll be seeing more and more hacks and solutions to analyze all the feedback you have gathered. But the question remains: will the analysis be trustworthy?

Conclusion: the Good, the Bad, and the AI?

In a nutshell, ChatGPT can currently act as a very fast intern. It’s helpful but lacks some rigor and the ability to challenge you; no offense to all past, present and future interns. To keep this article short, we also left out many other interesting steps we could have dug into, such as pre-qualification questionnaires or post-test questions. Let’s take a step back, because we, as humans, can:

The good

- You will get an initial draft of your protocol.

- You can be asked relevant questions that can really help you design a better study.

- It feels good to have “someone” to talk to about the project. Even if you’re working in a team, you can start with ChatGPT before you finalize the job with your human counterparts. Strangely, I thought this element was the most valuable in the process.

The bad

- If you really chat with ChatGPT - which we advise - you don’t necessarily save time.

- You don’t get a ready-to-use protocol: at best you still need to amend it, and you may have to rewrite some elements entirely, as we saw before.

- You still need to take a step back to make sure you’ve asked yourself all the right questions.

- ChatGPT doesn’t challenge your opinion and is even capable of inserting false leads.

- The effective scope of intervention is - for the moment - limited to what can be written, such as the research protocol. Even there, there are limitations, for example with the scales to be used with post-test questions.

The AI

Let’s not forget that AI solutions are evolving quickly, so there might soon be more of the good and less of the bad, right? Yes, I did leave out the ethics of AI on purpose, but these are also real questions worth asking yourself: who owns the resulting conversations, what are the sources, and should a real human do this job? Maybe that’s the topic of another blog article. We’ll see.

Meanwhile, if you want to talk to real humans about your UX research projects, search no further and contact us 😊
