January 23, 2019

How to get figures for your quantitative study or survey?

Qualitative studies have a secret ally: the quantitative survey. Among other things, it gives you the figures you need to make decisions. But be careful not to produce third-rate figures from unreliable statistics based on samples that are too small, for example 100 people for a large target group. Here are some keys to designing better quantitative studies.

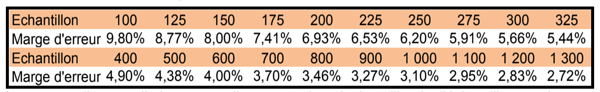

What are the key figures to keep in mind?

To guide you through this article, here are the three orders of magnitude that will come up:

- With 800-1,000 people, you can survey a nationally representative target and analyze sub-segments based on multiple criteria.

- With 300-400, you can survey a nationally representative target and analyze sub-segments on a limited number of criteria.

- With 100 people or fewer, you can only survey a specific target (bus drivers, for example) and cannot segment.
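To make these orders of magnitude concrete, here is a minimal sketch that computes the margin of error of a whole sample and of equal sub-segments within it. It assumes the standard normal-approximation formula for a proportion at 95% confidence, with the worst case p = 0.5; the function name and the equal 2-way and 4-way splits are our own illustrative assumptions.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    # Normal-approximation margin of error for a proportion at 95% confidence.
    # p = 0.5 is the worst case, which gives the widest (most cautious) margin.
    return z * math.sqrt(p * (1 - p) / n)

# The three orders of magnitude from the list, each split into 1, 2 or 4
# equal sub-segments (hypothetical splits, e.g. two genders or four age bands).
for total in (1000, 400, 100):
    cells = []
    for segments in (1, 2, 4):
        size = total // segments
        cells.append(f"{segments} x {size}: ±{margin_of_error(size):.1%}")
    print(f"{total:>4} people -> " + " | ".join(cells))
```

With 1,000 people, four sub-segments of 250 still stay around ±6%, whereas with 100 people two sub-segments of 50 are already near ±14%, which is why segmentation is ruled out at that size.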

Why are qualitative and quantitative opposed?

Let's start with a little background. Historically, the two were indeed opposed: one was based on statistical laws, while the other originated in the social sciences, more precisely psychology. Since then, research practices have moved past this opposition and found a healthy balance between the two. Simply put, qualitative and quantitative studies do not answer the same question:

- Qualitative answers the question why (and how): it is the realm of exploration, open questions and the absence of preconceived ideas. We want to find out what we don't know. The most common methods are therefore interviews of all types and focus groups, but also diary techniques, which have inspired remote user tests.

- Quantitative answers how much: it excels at measurement and closed-ended questions, and generally doesn't allow you to discover new hypotheses. We want to measure pre-identified phenomena with figures. Methods range from traditional surveys to the analysis of website or mobile app analytics data.

The key to quantitative is the margin of error

Now that the foundations are laid, let's move on to building a solid quantitative survey: one that will give you the figures you need to win your organization's buy-in for the launch of your latest website or app.

The margin of error is determined mainly by your sample size. Take a question to which 60% of participants answered "Yes". With a 10% margin of error, the true answer lies somewhere between 50% and 70%. You have no way of knowing exactly where: every value in that range is compatible with the result.

The problem arises when comparing results. Taking our example further, suppose 60% of participants answer "Yes, I like apples" to one question and 70% answer "Yes, I like pears" to a second one, still with a 10% margin of error. Participants appear to prefer pears, but the true answers could be 70% for apples and 60% for pears, which would reverse the ranking.
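The apples-and-pears reasoning can be sketched in a few lines. This assumes 100 respondents, the usual 95% normal-approximation margin with the worst case p = 0.5, and uses interval overlap as the rule of thumb from the example; overlap is a cautious shortcut, not a formal significance test. All names are illustrative.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    # Normal-approximation margin of error for a proportion (95% by default);
    # p = 0.5 gives the widest, most conservative margin.
    return z * math.sqrt(p * (1 - p) / n)

def interval(observed: float, moe: float) -> tuple[float, float]:
    # Range of plausible true values around an observed proportion, clipped to [0, 1].
    return (max(0.0, observed - moe), min(1.0, observed + moe))

def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    # If the two ranges overlap, the ranking between the answers is not established.
    return a[0] <= b[1] and b[0] <= a[1]

moe = margin_of_error(100)  # about 9.8%, i.e. "close to 10%"
apples = interval(0.60, moe)
pears = interval(0.70, moe)
print(f"apples: {apples[0]:.1%} to {apples[1]:.1%}")
print(f"pears:  {pears[0]:.1%} to {pears[1]:.1%}")
print("ranking reliable?", not intervals_overlap(apples, pears))
```

With 100 respondents the ranges overlap, so the ranking is not reliable; with 1,000 respondents (a margin of about 3.1%) the same 60% and 70% results no longer overlap.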

"With a margin of error of 10%, if the result of a question is 60%, the true answer is between 50% and 70%"

Yes, but... how can we determine the sample size?

I have two pieces of good news:

- It's possible to determine the size of your sample from the margin of error you want.

- The research industry has set a standard on this subject: a margin of error of 5%.

With 400 participants in a target, you get a margin of error of 4.90%, enough for a solid sample (the margin drops below 5% from 385 participants). Surveying 300 people gives a margin of error of 5.66%, which is still perfectly acceptable by industry standards. On the other hand, with 100 people, the margin of error is close to 10%, which is unacceptable.
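The figures in this paragraph can be reproduced with the textbook formula for a proportion, E = z·√(p(1 − p)/n), assuming 95% confidence (z = 1.96), the worst case p = 0.5 and a large population. Inverting it gives the sample size for a target margin. The helper names below are our own; this is a sketch, not the method of any particular survey tool.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    # Worst-case (p = 0.5) margin of error at 95% confidence, large population.
    return z * math.sqrt(p * (1 - p) / n)

def required_sample_size(target_moe: float, p: float = 0.5, z: float = 1.96) -> int:
    # Invert the formula: n = z^2 * p * (1 - p) / E^2, rounded up to whole people.
    return math.ceil(z ** 2 * p * (1 - p) / target_moe ** 2)

for n in (100, 300, 400):
    print(f"{n} participants: ±{margin_of_error(n):.2%}")

print("participants needed for ±5%:", required_sample_size(0.05))
```

This prints ±9.80%, ±5.66% and ±4.90%, and 385 participants for the 5% standard.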

If you’ve survived so far, congratulations! Please note that what we have just covered is a simplified version. Among other things, it leaves aside the confidence level, which should also be taken into account (the figures above correspond to the usual 95% level). We will now look at some typical questions that come up around surveys.

"With 100 people, the margin of error is close to 10%, which is unacceptable for a large target"
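The margin-of-error figures in this article assume a 95% confidence level. As a hedged sketch of why that level matters, the snippet below recomputes the margin for 400 participants at 90%, 95% and 99% confidence, deriving the critical value z from Python's standard `statistics.NormalDist`. The worst case p = 0.5 and the function names are assumptions for illustration.

```python
import math
from statistics import NormalDist

def z_score(confidence: float) -> float:
    # Two-sided critical value of the standard normal, e.g. 0.95 -> about 1.96.
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)

def margin_of_error(n: int, confidence: float = 0.95, p: float = 0.5) -> float:
    # Normal-approximation margin of error for a proportion at the given confidence.
    return z_score(confidence) * math.sqrt(p * (1 - p) / n)

for confidence in (0.90, 0.95, 0.99):
    print(f"n=400 at {confidence:.0%} confidence: ±{margin_of_error(400, confidence):.2%}")
```

For the same 400 people, demanding more confidence widens the margin (roughly ±4.1%, ±4.9% and ±6.4%), so a margin of error should always be quoted together with its confidence level.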

Which targeting criteria for a quantitative study?

Targeting criteria have a strong impact on the way your sample is built. With a very specific target, much smaller samples can be accepted: 100 people, or even as few as 30 for B2B features. This is partly explained by budget constraints, but there is also a methodological reason: the answers of a very specific target group tend to vary less than those of a large population. On the other hand, this lower number of participants rules out comparisons between sub-segments within the target population. In a nutshell, it is important not to have too many different profiles.

How can my sample be representative of my population?

To be representative of the population you wish to survey, your sample must include all the profiles that make up that population, and above all in the right proportions. To achieve this, simply set quotas on certain criteria. Ideally, do not fall below 80 people per sub-segment of analysis, a threshold commonly accepted in the statistical field (in B2C).

So, does that mean that the numbers in qualitative samples are useless?

Well, no, they are actually useful! Numbers are simply used differently in qualitative studies: they help prioritize problems and provide a first set of quantified indicators. For example, with how many participants have enough of an interface's UX problems been uncovered? This is definitely a statistical question, and the subject of our previous article "Is 5 users really enough?". Another very interesting article, by Jeff Sauro, covers 9 misconceptions that can arise when cross-referencing user tests and statistics.
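Our previous article is not reproduced here, but this question is commonly approached with the problem-discovery model 1 − (1 − p)^n, where p is the probability that a single participant runs into a given problem. The sketch below assumes p = 0.31, the average detection rate popularised by Jakob Nielsen; real rates vary a lot between products and tasks, so treat the output as an order of magnitude rather than a rule. The function names are hypothetical.

```python
import math

def share_of_problems_found(n_users: int, p: float) -> float:
    # Expected share of problems seen at least once by n users, assuming each
    # user independently encounters a given problem with probability p.
    return 1 - (1 - p) ** n_users

def users_needed(target_share: float, p: float) -> int:
    # Smallest n such that 1 - (1 - p)^n >= target_share.
    return math.ceil(math.log(1 - target_share) / math.log(1 - p))

P_DETECT = 0.31  # assumed average detection rate per user (illustrative)

print(f"5 users: {share_of_problems_found(5, P_DETECT):.0%} of problems")
print("users needed for 80%:", users_needed(0.80, P_DETECT))
```

Under this assumption, 5 users uncover about 84% of the problems; with a lower detection rate, the same 5 users find far fewer, which is exactly where the statistics come in.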

Would you like to learn more about the user research methods available to better understand your users and test your products and services? Check out our quantitative survey page.
