September 21, 2021

How to analyze the results of a user test?

Analyzing the results of a user test when you want to study a project or a new product is no easy task! It is easy to get lost among all the information gathered and to miss important points. Yet there are ways to use your data efficiently and quickly find what matters. To help you understand your usability test with peace of mind, we have listed all the key steps in this guide. Here is our breakdown.

Summary:

What is a user test?

Building a user study: the preparatory work

1: Define what you want to measure

2: Choose your test

3: Script your study

Analyze the results of a user test: the key steps

1: Stay objective

2: Organize and synthesize the results obtained

3: Break down the feedback by stage

4: Add custom tags

5: Prioritize

6: Illustrate the feedback

Understanding usability testing: the pitfalls to avoid

1: Focus on the individual rather than the entire sample

2: Not taking enough perspective

3: Consider only negative feedback

After the analysis: recommendations

What future for user test analysis?

What is a user test?

First, let's lay the foundations: a user test is an approach used to evaluate a product by having it tested by objective users. They are generally compensated for their participation in the study and take part in service or product testing missions, online or offline. For each mission, they follow the steps of a test protocol and describe their experience, giving positive and negative feedback. Each piece of feedback is accompanied by a visual illustration: photo, screenshot, or video.

These tests can be done remotely or in person, and in a moderated, unmoderated, or post-moderated fashion. In each case, they are carried out using scenarios prepared in advance.

This process is essential to identify the negative and positive points of a website, a new application, or a new product. Optimizing UX and increasing the conversion rate are among the goals of user testing. Based on the needs, expectations, and challenges of your business, you must build the strategy and the course of your test step by step and then analyze its results.

Building a user study: the preparatory work

Understanding a usability test requires a clear and rigorous methodology that begins as soon as you design your study. Here is a list of the essential points for carrying out your user study:

1: Define what you want to measure

Do you want to test a new mobile application, a website, or a new product? Are you redesigning a site, or do you simply want to learn more about your users to better understand their needs? Knowing precisely what you want to measure is an essential first step for your study to be a success.

2: Choose your test

Will your test be moderated, unmoderated, or post-moderated? Although the analysis of the feedback is much the same, the study itself will run differently depending on your choice.

The moderated test takes place remotely or in person. Users and moderators can communicate and share their screens in real time. Participants should think out loud as they complete the list of activities they have been given. The moderator may intervene to ask for further details on certain actions, or to request more precise feedback during an individual interview.

The unmoderated user test only takes place remotely, but this time the immersion is total, as no moderator communicates with the tester. The study is therefore more realistic and the results arrive faster, but it takes solid preparation so that the course is clear and the questions are relevant.

At Ferpection, we like fine-grained analysis and keep the best of each technique. For our user tests, we apply post-moderation: we question the participants after the test to ask them for details. This way, we make sure that the instructions were fully understood and we safeguard the quality of our feedback.

3: Script your study

You have defined your needs and chosen your test. Now, you need to define the steps and the list of activities that the participants of your user test will have to complete. Write questions that are open-ended but unambiguous. Be specific so that the user can provide you with relevant answers.

Analyzing the results of a user test: the key steps

Now that you have completed your study, how do you analyze the results of your user test?

1: Stay objective

This is the basis for good analysis. To understand your usability test, it is important to set aside your personal relationship to the study. Always be as objective as possible, and rely on facts rather than "impressions".

Put yourself in the shoes of the user, and go through the experience step by step to retrace their journey and the difficulties they may have encountered. Be as factual as possible: for example, instead of saying the user couldn't find what was asked for, say where they clicked instead.

Do not focus only on the negatives, and keep in mind that taking a step back is essential to keep a clear overview of your results.

2: Organize and summarize the results obtained

Once you have all the feedback in hand, you will need to compile and organize it to bring out the main trends, the positives, and the sticking points. At Ferpection, we like tables that summarize the raw data collected. They make it easy to quickly get an idea of the points raised by the testers, whether positive or not: percentage of problems, average scores, verbatims, user journey, etc. This initial information offers an interesting summary of the results of the experiment, but you need a more detailed analysis of the data to understand your usability test. Make at least one table (Excel, Google Sheets, etc.) with:

- the names of the participants

- the feedback and verbatim collected for each stage

- the average mark obtained for each activity

- the main positives and negatives of the test

- blocking points encountered during the stages

- areas for improvement

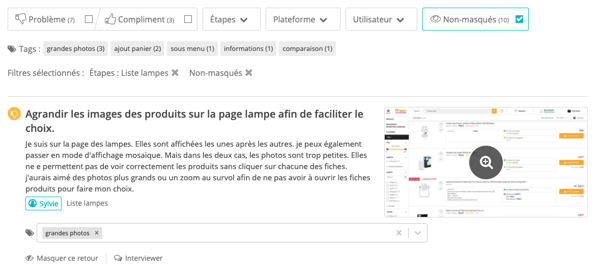
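For teams that export their feedback rather than filling in a spreadsheet by hand, the summary table described above could be sketched in a few lines of Python. This is only an illustration: the record fields (`tester`, `stage`, `score`, `kind`, `verbatim`), the sample data, and the `summarize_by_stage` function are all assumptions made up for the example, not a Ferpection format or tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical feedback records: one row per tester and stage.
feedback = [
    {"tester": "Alice", "stage": "Search", "score": 4, "kind": "positive",
     "verbatim": "The search bar is easy to spot."},
    {"tester": "Bob", "stage": "Search", "score": 2, "kind": "negative",
     "verbatim": "I did not see the filters."},
    {"tester": "Alice", "stage": "Checkout", "score": 1, "kind": "blocking",
     "verbatim": "The payment button did nothing."},
    {"tester": "Bob", "stage": "Checkout", "score": 3, "kind": "negative",
     "verbatim": "Too many form fields."},
]


def summarize_by_stage(rows):
    """Group feedback by stage; compute the average score and counts per kind."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["stage"]].append(row)

    summary = {}
    for stage, items in grouped.items():
        summary[stage] = {
            "average_score": mean(item["score"] for item in items),
            "positive": sum(item["kind"] == "positive" for item in items),
            "negative": sum(item["kind"] == "negative" for item in items),
            "blocking": sum(item["kind"] == "blocking" for item in items),
            "verbatims": [item["verbatim"] for item in items],
        }
    return summary


summary = summarize_by_stage(feedback)
for stage, stats in summary.items():
    print(stage, stats["average_score"], stats["blocking"])
```

Keeping the verbatims next to the figures means each number in the table can still be traced back to what a tester actually said.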

3: Break down the feedback by stage

The stages are markers retracing the path of the user throughout their navigation, and they are essential during a remote test. They are the backbone of your script.

Looking at the feedback from each step allows information to be broken down and isolated as needed, so that it can be processed more efficiently. You can select a given step to get a more precise view of how users felt. We recommend that you read the compliments first, then the issues. You can then highlight the positive and the negative points of the selected step. Thanks to tags, you can then highlight blocking elements and priority feedback.

4: Add custom tags

While the steps are defined beforehand, the custom tags must be created afterwards. They are used to show the main trends and the occurrences that appear regularly (e.g., a lack of visibility on a page). They give statistics on how many times a difficulty occurs, and for how many testers. With these filters, you can classify and view issues much faster. You can also use them to communicate between teams and gain in efficiency: a tag can designate a person or a department able to solve the problem.
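As a rough sketch of the two statistics mentioned above (how many times a difficulty occurs, and for how many testers), the counting could look like this in Python. The tag names, tester names, and the `tag_statistics` function are hypothetical and only serve the example.

```python
from collections import defaultdict

# Hypothetical tagged feedback: (tester, tag) pairs added after reading the feedback.
tagged_feedback = [
    ("Alice", "lack of visibility"),
    ("Alice", "lack of visibility"),
    ("Bob", "lack of visibility"),
    ("Bob", "slow loading"),
    ("Chloe", "slow loading"),
    ("Chloe", "lack of visibility"),
]


def tag_statistics(pairs):
    """Return, for each tag, its number of occurrences and of distinct testers."""
    occurrences = defaultdict(int)
    testers = defaultdict(set)
    for tester, tag in pairs:
        occurrences[tag] += 1
        testers[tag].add(tester)
    return {
        tag: {"occurrences": occurrences[tag], "testers": len(testers[tag])}
        for tag in occurrences
    }


stats = tag_statistics(tagged_feedback)
# List the most frequent tags first.
for tag, counts in sorted(stats.items(), key=lambda kv: kv[1]["occurrences"], reverse=True):
    print(f"{tag}: {counts['occurrences']} occurrences across {counts['testers']} testers")
```

Counting distinct testers separately from raw occurrences avoids giving too much weight to one participant who reports the same difficulty several times.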

5: Prioritize

Next, you need to prioritize the problems according to their level of severity, but also according to your ability to resolve them. This will allow you to quickly identify a range of solutions and concrete actions to take to improve each step, whatever your needs (converting, strengthening your sales funnel, or simply getting to know your customers better).

Setting up an effective action plan with quick wins, building on your strengths to increase your added value, creating your personas, and feeding your roadmap will no longer be a problem for you.

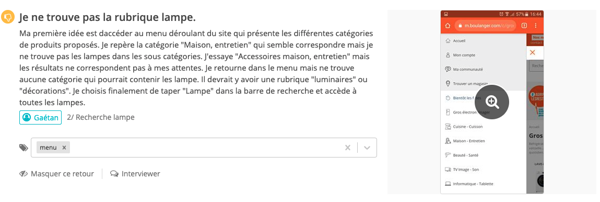
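One possible way to picture this prioritization is to rate each problem on severity and on the effort needed to fix it, then sort. The sketch below assumes two 1-to-3 scales and a simple definition of a quick win (fairly severe, easy to fix); the scales, the thresholds, and the sample issues are illustrative assumptions, not a method prescribed here.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    severity: int  # 1 (minor) to 3 (blocking); hypothetical scale
    effort: int    # 1 (easy to fix) to 3 (hard to fix); hypothetical scale


# Hypothetical issues raised by a test.
issues = [
    Issue("Payment button unresponsive", severity=3, effort=2),
    Issue("Filters hard to find", severity=2, effort=1),
    Issue("Footer typo", severity=1, effort=1),
    Issue("Account creation flow confusing", severity=3, effort=3),
]


def prioritize(items):
    """Sort by severity (highest first), then by effort (lowest first)."""
    return sorted(items, key=lambda issue: (-issue.severity, issue.effort))


def quick_wins(items, min_severity=2, max_effort=1):
    """Keep issues that are fairly severe yet easy to fix (assumed thresholds)."""
    return [i for i in items if i.severity >= min_severity and i.effort <= max_effort]


ranked = prioritize(issues)
wins = quick_wins(issues)
for issue in ranked:
    print(issue.name, issue.severity, issue.effort)
```

Sorting on severity first keeps blocking problems at the top of the list even when they are expensive to fix, while the quick-win filter surfaces what can be shipped immediately.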

6: Illustrate the feedback

Finally, if you need to present these results in a meeting, be sure to illustrate user experiences (whether good or bad) with verbatims. This will demonstrate that the opinions communicated are those of the participants, and not one individual's point of view. It is a good practice that helps you keep the perspective necessary for a good understanding of the tests.

Understanding usability testing: the pitfalls to avoid

Some pitfalls await you along the way during your user analysis. Here is a list of them, and how to avoid them.

1: Focus on the individual rather than the entire sample

Some forms of feedback have more impact than others. Video feedback, for example, sometimes shows very visible frustration that you may be sensitive to. Comments with strong words can also be more powerful. Yet these are often just isolated elements.

In order not to fall into this trap, at Ferpection we systematically share the verbatims communicated by the testers. It is important to focus on the occurrence of a problem, rather than on an individual item.

2: Not taking enough perspective

There are sometimes internal issues that are taken to heart, because they are debated between different teams. In these cases, you may be tempted to focus on a particular point, especially if you are sensitive to the issue. However, don't forget that user testing is first and foremost user-centric, and that it serves an optimization strategy (be it in-store or remote testing, for launching a new product, a mobile application, a redesign, etc.). So look at the whole situation, without focusing on one point in particular.

3: Only consider negative feedback

You should also be careful not to focus solely on negative feedback, as the analysis of a user test also takes positive feedback into consideration. Sometimes, obsessed with bad comments, we fail to notice that there are only 3 of them against 18 pieces of positive feedback that have been neglected.

In the analysis, the positive points highlighted can be strengthened further to add value to the UX. It is therefore important to determine how certain problems should be solved, but the test also allows you to prioritize the strong elements that should be consolidated.

After the analysis: the recommendations

Once the analysis is complete, an expert in UX research can propose tailored recommendations to quickly resolve the biggest problems raised by the test. You then leave pure analysis and start to use the data obtained. The number of occurrences helps determine how important a recommendation is, as does the moment when the obstacle arises in the user experience. The earlier the difficulty arises, the greater the barrier.
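To make the two criteria above concrete (number of occurrences, and how early the obstacle appears), here is a minimal Python sketch. The weighting used, occurrences multiplied by a weight that decreases with each later stage, is purely an assumption chosen for illustration, as are the stage names, the sample problems, and the `importance` function.

```python
# Hypothetical ordered stages of the test script.
stages = ["Home", "Search", "Checkout"]

# Hypothetical problems: (description, stage where it arises, number of occurrences).
problems = [
    ("Payment error message unclear", "Checkout", 4),
    ("Menu not visible", "Home", 5),
    ("Filters hidden", "Search", 6),
]


def importance(stage, occurrences, ordered_stages):
    """Assumed score: occurrences weighted more heavily for earlier stages."""
    position = ordered_stages.index(stage)           # 0 for the first stage
    earliness_weight = len(ordered_stages) - position
    return occurrences * earliness_weight


ranked = sorted(
    problems,
    key=lambda p: importance(p[1], p[2], stages),
    reverse=True,
)
for description, stage, occurrences in ranked:
    print(description, stage, importance(stage, occurrences, stages))
```

With this assumed weighting, a problem on the first stage can outrank a more frequent one that appears later, which reflects the idea that an early obstacle blocks everything that follows.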

What future for user test analysis?

In the age of automation, where automatic transcriptions are gaining in finesse and relevance, semantic analysis is gradually emerging. Thanks to an algorithm, it would be possible to process all the data collected during user tests at scale. Nevertheless, the time saved must be weighed against the loss of analysis quality. At Ferpection, we remain alert and continuously keep up with the evolution of UX test analysis.

If you need more advice and would like to benefit from personalized support, do not hesitate to call on our teams.
